The Chip Wars Are Getting Weird, and Someone's Lying

So, I’m looking at my screen, trying to connect the dots on three different stories that feel like they’re from alternate universes. In one corner, you have the brute-force way, built on American silicon. Samsung is apparently building a cathedral to the God of More, a massive facility stuffed with 50,000 of Nvidia's priciest GPUs, all to automate chip manufacturing. It’s the classic playbook: if you have a problem, throw a mountain of money and silicon at it until it goes away.

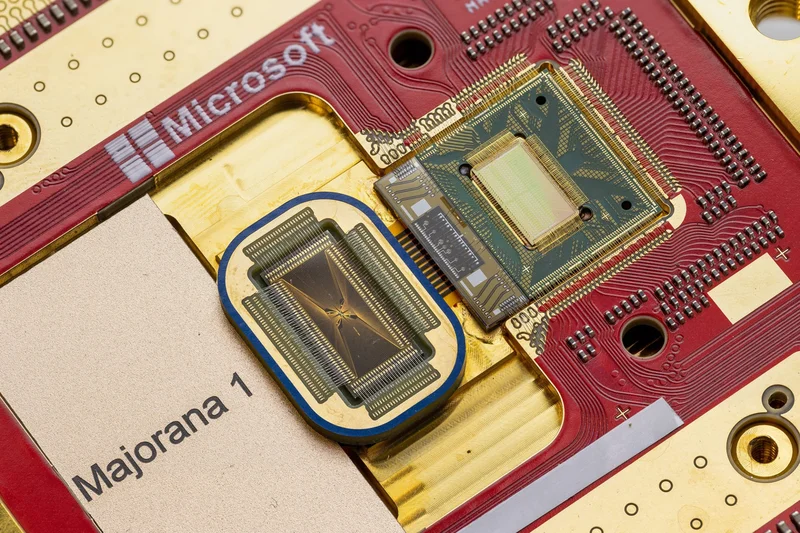

In the other corner, you have a story that reads like pure science fiction. Scientists in China claim they've solved a "century-old problem" with a new analog chip. The headline is as bold as the claim: "China solves 'century-old problem' with new analog chip that is 1,000 times faster than high-end Nvidia GPUs." Analog. You know, the kind of computing our grandfathers abandoned. And this little retro wonder is supposedly 1,000 times faster than a top-of-the-line Nvidia H100 GPU while using a fraction of the power.

A thousand times.

Let that sink in. Not 10% faster. Not twice as fast. A thousand times. This isn't an improvement; it's a paradigm shift so massive it borders on the unbelievable. So, what are we supposed to believe here? Is the future a giant warehouse of power-hungry digital monsters, or is it an elegant little analog brain that sips energy like a fine wine? Are we watching a heavyweight boxing match or a magic show?

And more importantly, why is everyone suddenly so desperate for a miracle?

The Dirty Secret Behind the Curtain

Here’s the part nobody wants to put in the glossy press releases. The whole chip industry is choking on its own success. I waded through a sponsored piece from Siemens (you know, the kind of corporate fluff I usually ignore), and this one, "AI in Chip Design: Faster Debugging With Vision AI," accidentally told the whole story. They call it the "physical verification bottleneck."

I call it a five-alarm dumpster fire.

Turns out, designing these modern chips has become so mind-bendingly complex that the process is breaking down. We’re talking about uncovering billions of errors in a single design. I can almost picture some poor engineer, fueled by stale coffee, scrolling through a billion lines of error codes just to make sure your next phone doesn't brick itself. This is absurd. No, "absurd" is too clean a word—this is a sign that the entire foundation is cracking.

This ain't your grandma’s chocolate chip cookie recipe, where you just follow the steps. This is more like a recipe with ten million ingredients, written in a dead language, where one misplaced chocolate chip causes a chemical explosion. The industry’s solution? AI, of course. Siemens is pushing their "Calibre Vision AI" to sift through this digital garbage heap. They talk about "AI-guided clustering" and "a new productivity curve." You know what that really means? It means the humans can't handle it anymore. We've built a machine so complex that we need another machine just to figure out if the first one works.
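If "clustering" sounds like magic, here is roughly the idea in toy form. To be clear, this is my own sketch and not how Siemens does it: the rule names, the coordinates, and the fixed-size tile trick are all made up for illustration. It just shows how a wall of individual violations can collapse into a handful of groups a human could actually look at.

```python
from collections import defaultdict

def cluster_by_tile(violations, tile=100):
    """Group (x, y, rule) violations by rule name and by the tile they land in.

    This is plain spatial bucketing, a stand-in for whatever a real tool does.
    """
    clusters = defaultdict(list)
    for x, y, rule in violations:
        key = (rule, x // tile, y // tile)
        clusters[key].append((x, y))
    return clusters

# Hypothetical data: 1,000 spacing errors crammed into one corner of the die,
# plus two unrelated strays. Rule names are invented for this example.
violations = [(i % 50, i // 50, "M1.spacing") for i in range(1000)]
violations += [(5000, 5000, "M2.width"), (9000, 120, "VIA.enclosure")]

clusters = cluster_by_tile(violations)
print(len(violations), "violations ->", len(clusters), "clusters")
```

Same mess, fewer piles. Whether the piles mean anything is still the engineer's problem.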

And the idea that we can just AI our way out of this mess is... well, it’s a nice story to tell the shareholders. But does anyone really think an algorithm is a substitute for a fundamentally sound design process? When your only solution is to build a smarter shovel to dig yourself out of an ever-deeper hole, maybe it’s time to stop digging.

This is the context for the big fight. The desperation. Samsung’s 50,000-GPU facility isn't an act of innovation; it's an act of brute force, an attempt to overwhelm the complexity problem with sheer computational muscle. And China’s analog chip? It’s a Hail Mary pass. It’s them saying, "What if we just stopped playing this broken digital game entirely?" It's a tempting thought. But is that analog chip a genuine game-changer for everything, or is it a specialized tool that's brilliant at one specific task, like matrix inversion, and useless for, say, running your operating system? The paper is conveniently light on those details.
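For a sense of how narrow "one specific task" can be, here is the boring digital version of a matrix problem: solving A x = b for x. This is the textbook method (Gaussian elimination with partial pivoting), written by me as an illustration. It says nothing about how the analog chip works internally, and the tiny 2x2 system is made up.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    # Build the augmented matrix [A | b] as floats.
    M = [list(map(float, row)) + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        # Pick the row with the largest entry in this column as the pivot.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        # Eliminate this column from every row below the pivot.
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    # Back-substitute from the last row up.
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

# Made-up system: 2x + y = 3 and x + 3y = 5.
x = solve([[2, 1], [1, 3]], [3, 5])
print(x)  # approximately [0.8, 1.4]
```

That's the whole job: numbers in, numbers out. Being spectacular at this is genuinely useful, but it is a long way from booting a laptop.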

We're All Just Beta Testers Now

Let's be real. This isn't a simple story of America vs. China or digital vs. analog. This is a story about hitting a wall at 200 miles per hour. The old way of making chips, the reliable Moore's Law engine that powered the last 50 years, is sputtering out. Everyone is scrambling, and nobody has the real answer.

One side is doubling down on the old religion, building bigger temples and praying for a miracle from their AI gods. The other is trying to start a new cult based on forgotten technology. I'm not putting my money on either of them. The only thing that’s clear is that the era of easy, predictable progress is over. We’re entering the weird, chaotic, and experimental phase. And we, the people buying the gadgets that run on these things, are the guinea pigs in their global science fair project. Good luck to us all.